IOzone lets you benchmark your filesystem performance, seeing how well record IO occurs for files of various sizes. With IOzone you can see more detailed information than the read, write, and rewrite figures that Bonnie++ reports. IOzone is great at detecting areas where file IO might not be performing as well as expected.

IOzone is available for openSUSE 10.3 as a 1-Click install, in multiverse for Ubuntu Hardy, and is in the standard Fedora 9 repositories.

The simplest way to invoke IOzone is using the -a option to select full automatic mode, with the -g option to extend the maximum file size to be twice your system's main memory size:

$ time iozone -a -g 4G >|/tmp/iozone-stdout.txt

A size given to any option can carry an m or g suffix (not case-sensitive) to specify megabytes or gigabytes. Redirecting standard output to a file lets you generate graphs from the output without having to copy data from the terminal. Wrapping the invocation in the time command tells you how long a full run took, so you can tailor future invocations to specific areas of interest rather than waiting for every test to be performed. The above command took a few hours to complete on a hardware parity RAID over six 750GB drives.
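The size arithmetic above can be sketched in a few lines of Python. This is only an illustration: the helper names are hypothetical and not part of IOzone, and treating a bare number as kilobytes is an assumption you should check against the usage text of your IOzone version.

```python
# Hypothetical helpers for illustration; they are not part of IOzone.
UNITS = {"m": 1024**2, "g": 1024**3}


def size_to_bytes(text):
    """Convert a size such as '4G' or '512m' to bytes.

    Assumption: a bare number with no suffix is taken as kilobytes.
    """
    text = text.strip().lower()
    if text and text[-1] in UNITS:
        return int(text[:-1]) * UNITS[text[-1]]
    return int(text) * 1024


def max_file_size_option(ram_bytes):
    """Return a -g argument of twice main memory, rounded up to whole gigabytes."""
    gib = 1024**3
    doubled = 2 * ram_bytes
    gigabytes = -(-doubled // gib)  # ceiling division
    return f"{gigabytes}g"


# A machine with 2GB of main memory gets a 4GB maximum file size.
print("iozone -a -g", max_file_size_option(size_to_bytes("2G")))
```

Sizing the largest file at twice main memory means the last few file sizes in the run cannot be served entirely from the buffer cache.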

You can use the -n option to specify the minimum file size that the automatic mode will use during testing. Normally testing will start with 64KB files and increase the size by doubling it each iteration. Using -n can save some time and generate more targeted benchmarks if you are interested in only larger files that cannot possibly fit into the system buffer caches. You can use the -s option instead of -g to only test files of a specific nominated size. The below command will test only using files of 4GB in size and records from 4KB to 256KB in size.

$ time iozone -a -s 4G -y 4 -q 256 >|/tmp/iozone-stdout.txt
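To get a feel for how many file sizes an automatic run will visit, the doubling progression can be sketched as follows. The function name is hypothetical, and the sketch assumes sizes simply double from the minimum until they pass the maximum, as described above.

```python
def auto_file_sizes(min_kb=64, max_kb=4 * 1024 * 1024):
    """List the file sizes (in KB) visited when doubling from min_kb to max_kb."""
    sizes = []
    size = min_kb
    while size <= max_kb:
        sizes.append(size)
        size *= 2
    return sizes


# Default 64KB start up to a 4GB maximum.
print(len(auto_file_sizes()), "file sizes")

# Starting at 1GB instead, as you might with the -n option.
print(auto_file_sizes(min_kb=1024 * 1024))
```

Raising the minimum removes most of the iterations, although the largest files are also the slowest to test, so the time saved is smaller than the count suggests.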

The tests performed by IOzone measure many things, starting with the simpler metrics such as read, write, reread, and rewrite performance. Read and write are obvious. Reread measures how well a system caches a file that was recently read. There are two rewrite tests: the one reported simply as rewrite overwrites an existing file, while record rewrite writes to a specific location in a file over and over again.

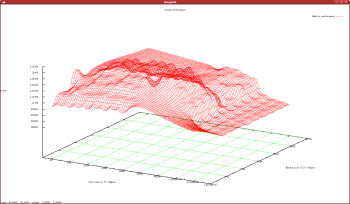

Figure 1

The normal read and write tests are performed by sequentially getting and putting data to a temporary file. There are also random read and write tests, which read or write small pieces of the temporary file instead of accessing it sequentially.

If you are running IOzone on a filesystem created on a RAID, then the stride read test (see -j) might be interesting. The stride read test can show you if there is a performance penalty for reading records that are not aligned to your RAID stripe boundary. For example, in a four-disk RAID-5, data is split into chunks (perhaps 64KB in size) and written over three disks with parity written on the fourth. So at the start of disk 1 you have chunk 1, disk 2 starts with chunk 2, disk 3 with chunk 3, and finally disk 4 contains the parity of chunks 1-3. Chunks 1-3 are called a RAID stripe. Sometimes the parity chunk is included in the stripe too. The order of chunks and parity changes in each stripe; the second stripe might put the parity on disk 1 and chunks 4-6 on disks 2-4. Because the parity has to be updated when any chunk in a stripe is changed, varying where the parity is stored helps even out the IO across all of the disks in the RAID.

Typically, applications try to access records at chunk or stripe boundaries. IOzone's stride parameter can be used to test the performance of read requests that are and are not aligned to a RAID stripe. The stride read test reads records at a given stride offset (number of bytes apart), so you might like to set the stride to some whole multiple of your RAID chunk size.

There are also versions of read, write, rewrite, and reread that are performed using the buffered fread() and fwrite() calls. These tests might show if your C library is causing some performance bottlenecks.
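The four-disk RAID-5 example above can be written out as a small Python sketch. The rotation rule used here is an assumption chosen only to reproduce that example (parity on disk 4 for the first stripe, on disk 1 for the second); real controllers and software RAID implementations use a variety of layouts. The function name is hypothetical.

```python
def raid5_stripe(stripe, disks=4):
    """Return one stripe's layout as a list indexed by disk (0-based).

    Entries are 1-based chunk numbers, or 'P' for the parity chunk.
    Assumed rotation: parity starts on the last disk and moves one
    disk along (wrapping around) for each following stripe.
    """
    data_per_stripe = disks - 1
    parity_disk = (disks - 1 + stripe) % disks
    chunk = stripe * data_per_stripe + 1
    layout = []
    for disk in range(disks):
        if disk == parity_disk:
            layout.append("P")
        else:
            layout.append(chunk)
            chunk += 1
    return layout


# Print the first three stripes, one row per stripe, one column per disk.
for stripe in range(3):
    print(raid5_stripe(stripe))
```

Each row shows that a write to any data chunk in the stripe also touches whichever disk holds that stripe's parity, which is why rotating the parity spreads the load.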

IOzone provides many options to control how data is read and written during the benchmark, so you can produce IO requests in a manner close to that of the application you intend to run on the machine. The options are described in alphabetical order in the IOzone documentation, but I have grouped them by their semantics in the following paragraphs.

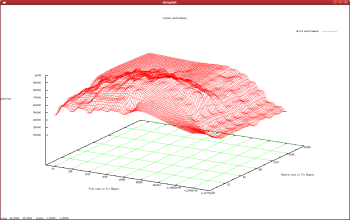

Figure 2

This first grouping of options relates to which API IOzone uses to perform the benchmarking. Memory-mapped files can be convenient to use because they do not require programs to explicitly execute read() functions to get file data; instead, data is loaded automatically when memory locations are accessed. However, programmers using memory-mapped files need to understand that there are potentially large seek delays when accessing large memory-mapped files with random IO patterns. There is no cut-and-dried rule as to when memory-mapped files should be used by applications, and the API does not change the performance limitations of the filesystem backing the IO.

The -B option causes files to be accessed through the memory-mapped APIs. You can use -D to specify that data should be transferred by the operating system asynchronously for these memory-mapped files, while the -G option expressly specifies that synchronous memory-mapped files be used. Applications that perform memory-mapped IO can tell the kernel about their access patterns using the madvise system call, which allows the kernel to tailor its caching to the access pattern the application has declared. You can use the special -+A option to tell IOzone how it should madvise the kernel during tests performed using memory-mapped IO.

The -H and -k options use POSIX async IO calls during the benchmark, and -H will also perform a copy from the memory buffer that was used by the operating system to perform the async IO request. Both -H and -k accept as their argument the number of async operations that should be attempted at any time. The -E option will use the pread API during benchmarks. The pread() call operates on file descriptors like the read() API but also takes the offset in the file that data is to be read from.
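To make the difference between these APIs concrete, here is a minimal Python sketch that fetches the same bytes with read(), pread(), and a memory mapping with an madvise hint. It illustrates the system calls only and is not how IOzone itself is implemented; the function name is hypothetical, and the madvise call is guarded because it is not available on every platform or Python version.

```python
import mmap
import os
import tempfile


def read_three_ways(path, offset, length):
    """Read the same bytes using read(), pread(), and a memory mapping."""
    fd = os.open(path, os.O_RDONLY)
    try:
        # read(): move the descriptor's position first, then read from there.
        os.lseek(fd, offset, os.SEEK_SET)
        via_read = os.read(fd, length)

        # pread(): the offset travels with the call, and the descriptor's
        # position is left where it was.
        via_pread = os.pread(fd, length, offset)

        # mmap: no explicit read call; slicing the mapping touches memory
        # and the kernel pages the file data in on demand.
        with mmap.mmap(fd, 0, access=mmap.ACCESS_READ) as mapped:
            # Declare a random access pattern where the platform supports it.
            if hasattr(mapped, "madvise") and hasattr(mmap, "MADV_RANDOM"):
                mapped.madvise(mmap.MADV_RANDOM)
            via_mmap = mapped[offset:offset + length]
    finally:
        os.close(fd)
    return via_read, via_pread, via_mmap


# Demonstration on a small throwaway file.
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(bytes(range(256)) * 16)
results = read_three_ways(tmp.name, 100, 50)
os.unlink(tmp.name)
print(results[0] == results[1] == results[2])
```

All three paths return identical data; what a benchmark such as IOzone measures is how differently they perform on a given filesystem.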

Another collection of options deals with how benchmarks are measured. The -c option includes the close() call in the time taken for the benchmark. If you are running on a filesystem that might delay committing changes to disk until a close() call, such as NFSv3, using the -c option should give you a fairer impression of filesystem performance. The -e option includes a flush call in the benchmark. The exact call made to flush data to disk depends on which IO subsystem you are using; for example, when benchmarking with file descriptors the fsync call will be used. Flushing is important in benchmarking because many database systems perform flushes to ensure that writes are on disk. The -o option opens files with the O_SYNC synchronous mode set, causing all writes to be flushed to disk immediately.
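The effect of including flush and close in the timed region can be sketched in Python as follows. This is a simplified illustration rather than IOzone's own timing code; the function and its keyword arguments are hypothetical names that loosely mirror the -e, -c, and -o options.

```python
import os
import tempfile
import time


def timed_write(path, data, include_flush=False, include_close=False,
                sync_open=False):
    """Write data to path and return the elapsed seconds.

    include_flush counts an fsync() in the timing, include_close counts
    the close(), and sync_open opens the file with O_SYNC.
    """
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC
    if sync_open:
        flags |= os.O_SYNC
    fd = os.open(path, flags, 0o600)

    start = time.perf_counter()
    view = memoryview(data)
    while view:  # os.write may write fewer bytes than requested
        written = os.write(fd, view)
        view = view[written:]
    if include_flush:
        os.fsync(fd)
    if include_close:
        os.close(fd)
        elapsed = time.perf_counter() - start
    else:
        elapsed = time.perf_counter() - start
        os.close(fd)
    return elapsed


# Compare the variants on a throwaway file.
with tempfile.TemporaryDirectory() as workdir:
    target = os.path.join(workdir, "sample.bin")
    payload = b"\0" * (1024 * 1024)
    for options in ({}, {"include_flush": True}, {"include_close": True},
                    {"sync_open": True}):
        print(options, timed_write(target, payload, **options))
```

Without a flush, the write can return as soon as the data is in the page cache, so the plain variant mostly measures memory speed rather than the disk.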

IOzone by default uses the current directory as the target filesystem for benchmarking. You can specify another location using the -f or -F options. -F works like -f but allows you to specify multiple locations for when you are testing multiprocess or multithreaded filesystem performance. The -U option specifies the mountpoint that the filesystem you are testing is located on. Specifying -U makes IOzone unmount and remount a specified filesystem between each test to flush any filesystem buffers.

The -j option sets the stride used for the stride read test. The parameter you pass to -j is multiplied by the record size, and the result is the distance between the records that IOzone reads in that test. The -y and -q options specify the minimum and maximum record size used in automatic testing mode. You can use the -r option to specify a single record size that should be tested instead of the range from -y to -q. Because the stride scales with the record size, if you choose the -j value with your RAID stripe size in mind and use a range of record sizes, you will be able to test aligned and unaligned performance and see both results on a single graph.
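The relationship between the -j value, the record size, and RAID chunk alignment can be worked through with a short Python sketch. It is a simplification that assumes a single pass through the file starting at offset zero, which may not match exactly how IOzone walks the file; the function names are hypothetical.

```python
def stride_read_offsets(record_kb, stride_multiplier, file_kb):
    """Byte offsets of the records read in one simplified stride pass.

    The stride is stride_multiplier * record size, matching how the
    -j argument is scaled by the record size.
    """
    if stride_multiplier < 1:
        raise ValueError("stride multiplier must be at least 1")
    record = record_kb * 1024
    stride = stride_multiplier * record
    last_start = file_kb * 1024 - record
    return list(range(0, last_start + 1, stride))


def chunk_aligned(offsets, chunk_kb=64):
    """Report, for each offset, whether it falls on a RAID chunk boundary."""
    chunk = chunk_kb * 1024
    return [offset % chunk == 0 for offset in offsets]


# 4KB records with -j 16 give a 64KB stride: every read is chunk aligned.
aligned = stride_read_offsets(record_kb=4, stride_multiplier=16, file_kb=256)
print(aligned, chunk_aligned(aligned))

# 8KB records with -j 3 give a 24KB stride: most reads are unaligned.
unaligned = stride_read_offsets(record_kb=8, stride_multiplier=3, file_kb=64)
print(unaligned, chunk_aligned(unaligned))
```

Because the same -j value yields a different stride for each record size, a single automatic run naturally mixes aligned and unaligned cases.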

You can cherry-pick which tests are performed during benchmarking using a sequence of -i options. Unfortunately the -i option accepts numeric values rather than more human-readable names. Most of the tests you can select rely on the write (-i 0) tests having been performed so that files have been created and initialized by IOzone. For example, to perform only read (1) and write (0) tests, use the -i 0 -i 1 options. The numbers for each test are shown in the output of iozone -h.
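If you script your runs, a small wrapper can translate readable names into -i options. The sketch below is hypothetical: only the numbers for write (0) and read (1) come from the text above, while the remaining entries reflect the usage text as I recall it and should be verified against the help output of your IOzone version.

```python
# Name to test-number mapping. Only write (0) and read (1) are confirmed
# by the article; the other entries are assumptions to verify locally.
TEST_NUMBERS = {
    "write": 0,
    "read": 1,
    "random": 2,
    "backward-read": 3,
    "record-rewrite": 4,
    "stride-read": 5,
    "fwrite": 6,
    "fread": 7,
}


def selection_args(names):
    """Build the -i arguments for the named tests.

    The write test (0) is always included because most other tests
    rely on the files it creates.
    """
    numbers = {TEST_NUMBERS[name] for name in names}
    numbers.add(0)
    args = []
    for number in sorted(numbers):
        args += ["-i", str(number)]
    return args


print(" ".join(["iozone", "-a"] + selection_args(["read", "stride-read"])))
```

Always adding test 0 avoids the easy mistake of selecting only a read test against files that were never created.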

You can run benchmarks with multiple processes by selecting throughput mode with the -t option and giving it the number of threads or processes to create. The -T option causes POSIX pthreads to be used to create the threads for throughput testing. Being able to choose pthreads instead of processes lets you benchmark IO using the same multithreading or multiprocessing model as the application you are planning to run. You can specify the minimum number of processes with the -l option and the maximum with the -u option.

There are also options such as -d and -J for inserting delays at various stages during the benchmark.

Interpreting the results

IOzone reports results on stdout in a tabular format starting with the smallest files you have nominated to create (64KB by default for -a automatic mode) up to the largest files. For each file size, your nominated record size range is tested from smallest to largest record size.
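If you want to process the numbers yourself rather than only graph them, the tabular output is straightforward to parse. The following Python sketch assumes that each data row consists solely of whitespace-separated integers (file size in KB, record size in KB, then one result per test) and that the columns appear in the order listed in COLUMNS; both are assumptions to check against the header of your own output. The sample text is made up for illustration and is not real benchmark data.

```python
# Assumed column order for the results; verify against your output header.
COLUMNS = ["write", "rewrite", "read", "reread", "random_read",
           "random_write", "bkwd_read", "record_rewrite", "stride_read",
           "fwrite", "frewrite", "fread", "freread"]


def parse_iozone_table(text, columns=COLUMNS):
    """Return a list of (file_kb, record_kb, results) tuples.

    Lines that are not made up entirely of integers (banner text,
    headers, blank lines) are skipped.
    """
    rows = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 3 or not all(field.isdigit() for field in fields):
            continue
        file_kb, record_kb = int(fields[0]), int(fields[1])
        results = dict(zip(columns, (int(field) for field in fields[2:])))
        rows.append((file_kb, record_kb, results))
    return rows


# Made-up sample in the general shape of IOzone output.
SAMPLE = """\
        Auto Mode
              kB  reclen   write rewrite    read  reread
              64       4     100     200     300     400
              64       8     110     210     310     410
"""

for file_kb, record_kb, results in parse_iozone_table(SAMPLE):
    print(file_kb, record_kb, results["write"], results["reread"])
```

Keying each row by file size and record size makes it easy to pull out a single slice, such as all record sizes for the 4GB file.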

The IOzone package comes with scripts to create nice graphs using gnuplot given the tabular output from IOzone. The gnuplot scripts are called Generate_Graphs and gengnuplot.sh. Generate_Graphs calls gengnuplot.sh multiple times to generate a graph for each test that IOzone performs in its benchmark and then runs gnuplot to show each of these graphs and generate PostScript output at the same time. Generate_Graphs uses the gnu3d.dem file to drive the gnuplot operations. You can easily customize this file to generate PNG bitmap images of each graph and not display the graphs interactively. The changes to gnu3d.dem to non-interactively generate PNG files are shown below:

set zlabel "Kbytes/sec"

set data style lines

set dgrid3d 80,80,3

#splot 'write/iozone_gen_out.gnuplot' title "Write performance"

set terminal png

set output "write/write.png"

splot 'write/iozone_gen_out.gnuplot' title "Write performance"

#pause -1 "Hit return to continue"

The screenshot in Figure 1 shows the rewrite performance of a hardware parity RAID using a 256KB chunk size across six 750GB disks, for file sizes from 64KB to 4GB, on a machine with 2GB of main memory. The benchmark was performed using the first iozone command shown above. Notice how performance is better before the record size exceeds 256KB. This is most likely because the RAID could perform a read-modify-write cycle for changes that are smaller than the chunk size. The dip in the front of the graph is due to smaller record size tests not being performed for larger files by the -a option.

For the same hardware and filesystem setup the write performance is shown in Figure 2. One thing that sticks out in the benchmark is the little dip on the plane for the larger records. This happens for file sizes of about 16KB and records between 256KB and 4MB. This might have been an anomaly that occurred during data collection, or there might be a component in the system that does not perform as well for that file and record size. Notice that for larger files the smaller record size performs much better until the file size becomes too large for the system cache (around the label starting with 4.19 toward the right of the file size axis) and the performance of all record sizes becomes much closer.

Wrap up

IOzone allows you to specify a range of file sizes to operate on and a range of record sizes to use for testing in those files. Along with this you can choose which operating system API calls are used to perform the IO in the tests, letting you select the API that is closest to the application you wish to improve IO performance for. The ability to generate a 3-D graph of the read, write, rewrite, and other performance statistics lets you see if there are areas of IO that the system as a whole is not handling well. For example, some RAID controllers might handle record sizes below a certain cutoff better than other record sizes and with IOzone you will be able to see this drop in performance on the graph.

IOzone lets you dig into IO performance beyond the single read, write, and rewrite figures that Bonnie++ reports. It can help you see the trends in IO performance as you vary file size, record size, the API used to issue IO requests, and other parameters. Bonnie++ gives a good indication of performance in a fairly short test run; once you are happy with your Bonnie++ figures, you can execute IOzone, which may take perhaps two to four hours to expose finer details of your IO performance.